前言

最近打算部署一个属于自己的 ChatGPT 网页服务,顺便提升一下自己写提示词的能力,于是便有了下面这篇文章。

发号施令 26 条准则

Principled Instructions Are All You Need for Questioning LLaMA-1/2, GPT-3.5/4

指令原则大解锁!26条Prompt黄金法则,精准提问,显著提升ChatGPT输出质量!

如何看待张同学投稿论文忘记删除GPT痕迹, 出现Certainly, here is a……?

Overview

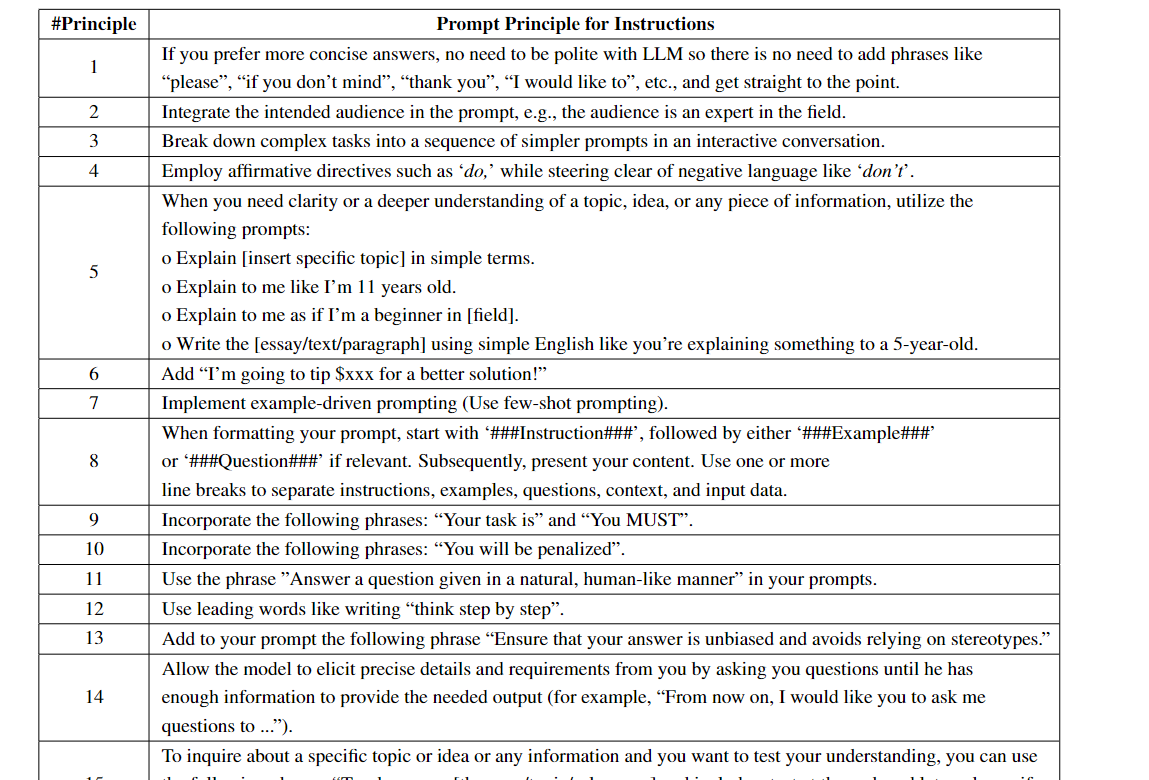

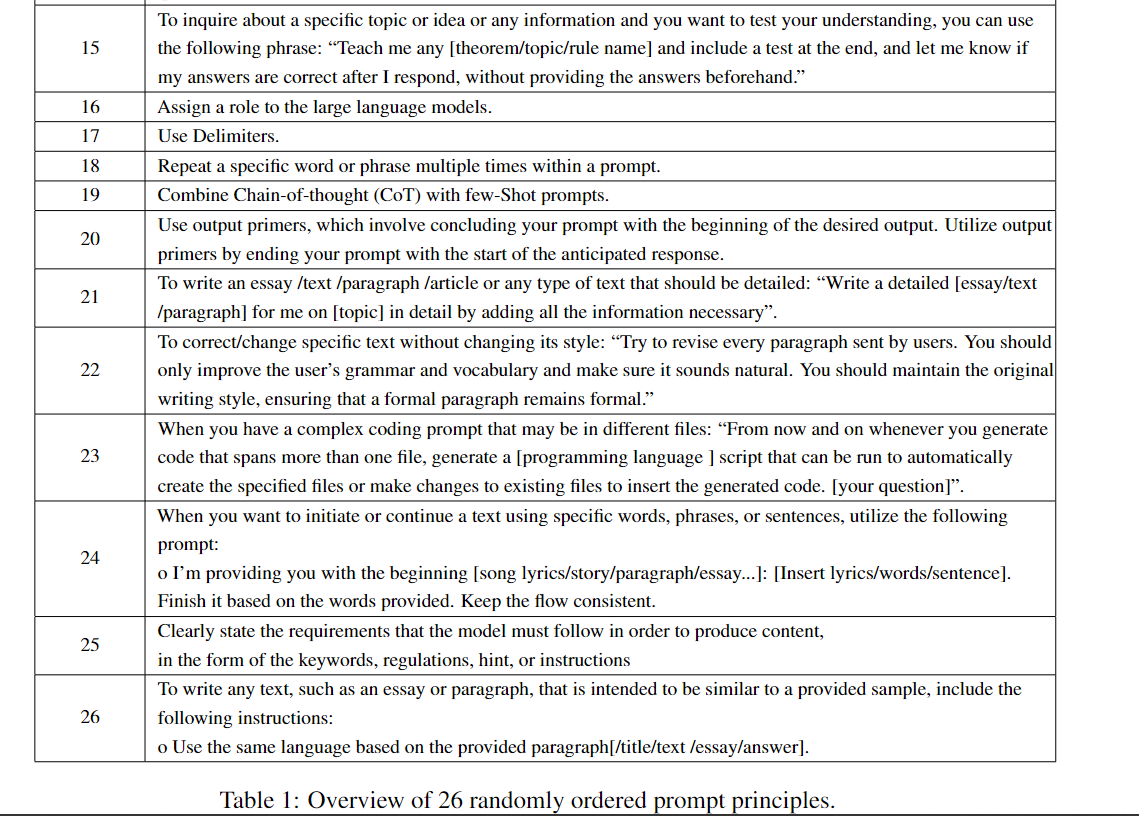

The overview of principles is presented in Table 1. According to their unique nature, we group them into five categories as in Table 2: (1) Prompt Structure and Clarity, e.g., integrate the intended audience in the prompt such as the audience is an expert in the field; (2) Specificity and Information, e.g., Add to your prompt the following phrase “Ensure that your answer is unbiased and does not rely on stereotypes.”; (3) User Interaction and Engagement, e.g., Allow the model to elicit precise details and requirements from you by asking you questions until he has enough information to provide the needed output “From now on, I would like you to ask me questions to…”. (4) Content and Language Style, e.g., No need to be polite with LLM so there is no need to add phrases like “please”, “if you don’t mind”, “thank you”, “I would like to”, etc., and get straight to the point; (5) Complex Tasks and Coding Prompts, e.g., Break down complex tasks into a sequence of simpler prompts in an interactive conversation.

Design Principles

In this study, a number of guiding principles are established for formulating prompts and instructions to elicit high-quality responses from pre-trained large language models:

Conciseness and Clarity: Generally, overly verbose or ambiguous prompts can confuse the model or lead to irrelevant responses. Thus, the prompt should be concise, avoiding unnecessary information that does not contribute to the task while being specific enough to guide the model. This is the basic principle guidance for prompt engineering.

Contextual Relevance: The prompt must provide relevant context that helps the model understand the background and domain of the task. Including keywords, domain-specific terminology, or situational descriptions can anchor the model’s responses in the correct context. We highlight this design philosophy in our presented principles.

Task Alignment: The prompt should be closely aligned with the task at hand, using language and structure that clearly indicate the nature of the task to the model. This may involve phrasing the prompt as a question, a command, or a fill-in-the-blank statement that fits the task’s expected input and output format.

Example Demonstrations: For more complex tasks, including examples within the prompt can demonstrate the desired format or type of response. This often involves showing input-output pairs, especially in “few-shot” or “zero-shot” learning scenarios. Avoiding Bias: Prompts should be designed to minimize the activation of biases inherent in the model due to its training data. Use neutral language and be mindful of potential ethical implications, especially for sensitive topics.

Incremental Prompting: For tasks that require a sequence of steps, prompts can be structured to guide the model through the process incrementally. Break down the task into a series of prompts that build upon each other, guiding the model step-by-step. Also, prompts should be adjustable based on the performance of the model and iterative feedback, i.e., it needs to be well prepared to refine the prompt based on initial outputs and model behaviors. Moreover, prompts should be adjustable based on the performance and response of the model, and iterative human feedback and preference.

Finally, more advanced prompts may incorporate programming-like logic to achieve complex tasks. For instance, use of conditional statements, logical operators, or even pseudo-code within the prompt to guide the model’s reasoning process. The design of prompts is an evolving field, especially as LLMs become more sophisticated. As researchers continue to explore the limits of what can be achieved through prompt engineering, these principles will likely be refined and expanded.

26 项原则中文翻译

- 与LLM交流时,无需使用礼貌性语言,如“请”、“如果你不介意”、“谢谢”等,直接陈述要点。

- 在提示中整合预期的听众,例如“听众是该领域的专家”。

- 将复杂任务分解为一系列更简单的提示,并通过互动式对话进行。

- 使用肯定的指令,如“做”,避免使用否定语言,如“不要”。

- 当你需要清晰理解某个主题、想法或任何信息时,使用以下提示:简单地解释[具体主题]。像我是11岁的孩子一样向我解释。像我是[领域]新手一样向我解释。使用简单的英语写[论文/文本/段落],就像你在向5岁的孩子解释一样。

- 添加“I’m going to tip $xxx for a better solution!”(我会给xxx小费以获得更好的解决方案!)。

- 实施示例驱动的提示(使用少数示例提示)。

- 在格式化提示时,首先使用‘###Instruction###’,然后是‘###Example###’或‘###Question###’(如果相关),然后呈现内容。使用一个或多个换行符来分隔指令、示例、问题、上下文和输入数据。

- 加入以下短语:“Your task is”(你的任务是)和“You MUST”(你必须)。

- 加入以下短语:“You will be penalized”(你将受到惩罚)。

- 在提示中使用短语“Answer a question in a natural human-like manner”(以自然的人类方式回答问题)。

- 使用引导性词汇,如写“think step by step”(逐步思考)。

- 在你的提示中添加以下短语:“Ensure that your answer is unbiased and does not rely on stereotypes”(确保你的回答是无偏见的,不依赖于刻板印象)。

- 允许模型通过向你提问直到获得足够的信息来提供所需输出,例如“From now on I would like you to ask me questions to…”(从现在开始,我希望你向我提问,直到…)。

- 要了解特定主题或想法或任何信息,并且你想测试你的理解,你可以使用以下短语:“Teach me the [Any theorem/topic/rule name] and include a test at the end but don’t give me the answers and then tell me if I got the answer right when I respond”(教我[任何定理/主题/规则名称]并在最后包括一个测试,但不要给我答案,然后在我回答时告诉我是否正确)。

- 为大语言模型分配一个角色。

- 使用分隔符。

- 在提示中多次重复特定单词或短语。

- 结合思维链(CoT)与少数示例提示。

- 使用输出引导器,它涉及以期望输出的开始结束你的提示。通过以预期响应的开始结束你的提示来使用输出引导器。

- 要编写详细的[论文/文本/段落/文章]或任何需要详细的文本类型:“为我详细写一篇关于[主题]的详细[论文/文本/段落],添加所有必要的信息”。

- 要更正/更改特定文本而不改变其风格:“尝试修改用户发送的每个段落。你只应该改善用户的语法和词汇,确保它听起来自然。你不应该改变写作风格,例如将正式段落变得非正式”。

- 当你有一个可能涉及不同文件的复杂编码提示时:“从现在开始,每当你生成跨越多个文件的代码时,生成一个[编程语言]脚本,可以运行以自动创建指定的文件或对现有文件进行更改以插入生成的代码。[你的问题]”。

- 当你想使用特定单词、短语或句子开始或继续文本时,使用以下提示:我为你提供了开始[歌词/故事/段落/论文…]:[插入歌词/单词/句子]。根据提供的词汇完成它。保持流畅一致。(1)清楚地陈述模型必须遵循的要求,以产生关键词、规则、提示或指令形式的内容。(2)要编写任何文本,如论文或段落,其内容与提供的示例类似,包括以下指令:(3)请根据提供的段落[/标题/文本/论文/答案]使用相同的语言。

- 明确陈述模型必须遵循的要求,以便根据关键词、规则、提示或指令产生内容。

- 要写任何文本,如文章或段落,且内容与提供的样本相似,请包含以下指示:“请根据提供的段落[/标题/文本/文章/答案]使用相同的语言基础。”

提示工程指南

提示工程(Prompt Engineering)是一门较新的学科,关注提示词开发和优化,帮助用户将大语言模型(Large Language Model, LLM)用于各场景和研究领域。 掌握了提示工程相关技能将有助于用户更好地了解大型语言模型的能力和局限性。

研究人员可利用提示工程来提升大语言模型处理复杂任务场景的能力,如问答和算术推理能力。开发人员可通过提示工程设计、研发强大的工程技术,实现和大语言模型或其他生态工具的高效接轨。

提示工程不仅仅是关于设计和研发提示词。它包含了与大语言模型交互和研发的各种技能和技术。提示工程在实现和大语言模型交互、对接,以及理解大语言模型能力方面都起着重要作用。用户可以通过提示工程来提高大语言模型的安全性,也可以赋能大语言模型,比如借助专业领域知识和外部工具来增强大语言模型能力。

基于对大语言模型的浓厚兴趣,我们编写了这份全新的提示工程指南,介绍了大语言模型相关的论文研究、学习指南、模型、讲座、参考资料、大语言模型能力以及与其他与提示工程相关的工具。